Deploying a scalable PostgreSQL cluster on DigitalOcean Kubernetes using Kubegres

This was done for the DigitalOcean Kubernetes Challenge!

Disclaimer: This isn't an intro to Kubernetes! Some working knowledge is required, but honestly not a lot. It's also assumed that you have kubectl installed already.

Brief overview

When deploying a database on Kubernetes, you have to make it redundant and scalable. You can rely on database management operators like KubeDB or database-specific solutions like Kubegres for PostgreSQL or the MySQL Operator for MySQL.

The challenge prompt above says it all. I had barely done anything stateful with Kubernetes before, so I was a bit intimidated. Thankfully, Kubegres was very easy to set up. All you have to do is apply some manifests and it'll handle replication and failover for you, plus backups if you configure them. We'll only scratch the surface here, so check out their site for more details. Massive props to the contributors!

The steps

- Set up a cluster using the GUI

- Connect to your cluster using doctl

- Apply Kubegres manifests

- Done! Delete some pods to test replica promotion

- Cleanup

Simple, right?

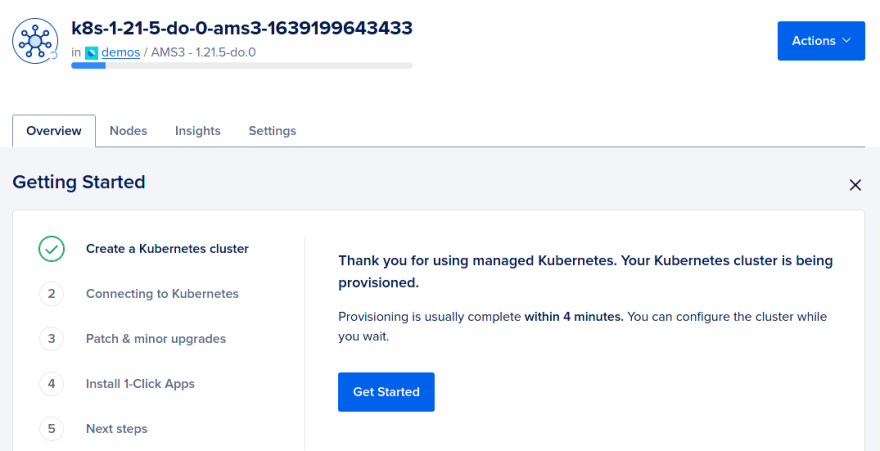

1. Set up a cluster using the GUI

I had never used DigitalOcean before, so I opted to use the GUI the first time around.

1.1. In your control panel, click the Create button at the top of the screen and select Kubernetes.

1.2. Customize your cluster then click Create Cluster at the bottom of the page. As for me, I just left everything on default :D

That also means the cluster will be associated with my default project (every user has one) and made in the default VPC of the default region.

1.3. You should be taken to your cluster's page. Provisioning should be done in a few minutes.

2. Connect to your cluster using doctl

Once the cluster is up, the Getting Started section on your cluster's page should, well, get you started!

2.1. Install and configure doctl, DigitalOcean's CLI, if you haven't yet. Here's a guide. You'll have to create a personal access token as well.

2.2. Once you've verified that doctl is working, run the command given on your cluster's Getting Started section to connect to your cluster.

You should get something like:

Notice: Adding cluster credentials to kubeconfig file found in "/your/kube/config/path"

Notice: Setting current-context to <your-cluster-id>
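
For reference, the command from the Getting Started section usually looks like the sketch below. The wrapper function connect_cluster is just a convenience I made up for this post (doctl doesn't ship it), and my-cluster is a placeholder for your own cluster's name or ID.

```shell
# connect_cluster: fetch credentials for a DigitalOcean Kubernetes cluster
# and print the kubectl context that is active afterwards.
# "$1" is the cluster's name or ID (hypothetical example: my-cluster).
connect_cluster() {
  # Adds the cluster's credentials to your kubeconfig and switches context.
  doctl kubernetes cluster kubeconfig save "$1" || return 1
  # Show which context kubectl will talk to from now on.
  kubectl config current-context
}
```

Call it with connect_cluster my-cluster once doctl has been authenticated.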

2.3. Confirm that you have access to your cluster.

$ kubectl get nodes

NAME                   STATUS   ROLES    AGE   VERSION
my-node-pool-asdfjkl   Ready    <none>   10m   v1.21.5
my-node-pool-asdfjkm   Ready    <none>   10m   v1.21.5
my-node-pool-asdfjkn   Ready    <none>   10m   v1.21.5

3. Apply Kubegres manifests

Here, the Kubegres getting started guide takes over. Still very straightforward. I'll give the condensed version.

3.1. Install the Kubegres operator and check its components in the created namespace.

$ kubectl apply -f https://raw.githubusercontent.com/reactive-tech/kubegres/v1.14/kubegres.yaml

$ kubectl get all -n kubegres-system
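
If you'd rather not eyeball that output, you can block until the operator is up. This is a small sketch, assuming the operator runs as one or more Deployments in the kubegres-system namespace; the function name and the 120-second default timeout are my own choices.

```shell
# wait_for_operator: wait until every Deployment in the operator's
# namespace reports the Available condition, or give up after the timeout.
# "$1" is an optional timeout (default: 120s, an arbitrary choice).
wait_for_operator() {
  kubectl wait deployment --all \
    --namespace kubegres-system \
    --for=condition=Available \
    --timeout="${1:-120s}"
}
```

Running wait_for_operator returns as soon as the operator is ready, and exits with an error if the timeout passes first.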

3.2. Check the storage class. If you did everything correctly, DigitalOcean Block Storage should be the default for your cluster.

$ kubectl get sc

NAME                         PROVISIONER                 ...
do-block-storage (default)   dobs.csi.digitalocean.com   ...

3.3. Create a file for your Postgres superuser and replication user credentials.

$ vi my-postgres-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: mypostgres-secret
  namespace: default
type: Opaque
stringData:
  superUserPassword: postgresSuperUserPsw
  replicationUserPassword: postgresReplicaPsw

The values under stringData are arbitrary, and so are the key names, as long as they match the keys referenced by the Kubegres resource in step 3.5. We'll use these for now.

3.4. Create the secret in your cluster.

$ kubectl apply -f my-postgres-secret.yaml
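
If you don't want plaintext passwords sitting in a file, kubectl create secret generic can build an equivalent Secret from the command line. Below is a rough sketch that fills it with random passwords. The helpers random_password and create_postgres_secret are names I made up, and reading from /dev/urandom assumes a Linux or macOS shell.

```shell
# random_password: print 32 random hexadecimal characters (16 bytes).
random_password() {
  od -An -N16 -tx1 /dev/urandom | tr -d ' \n'
}

# create_postgres_secret: create a Secret with the same name and keys as
# my-postgres-secret.yaml, but with generated passwords.
create_postgres_secret() {
  kubectl create secret generic mypostgres-secret \
    --namespace default \
    --from-literal=superUserPassword="$(random_password)" \
    --from-literal=replicationUserPassword="$(random_password)"
}
```

Use this in place of steps 3.3 and 3.4, not in addition to them: kubectl create fails if a Secret with that name already exists.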

3.5. Create a file for your Kubegres resource:

$ vi my-postgres.yaml

apiVersion: kubegres.reactive-tech.io/v1
kind: Kubegres
metadata:
  name: mypostgres
  namespace: default
spec:
  replicas: 3
  image: postgres:14.1
  database:
    size: 1Gi
  env:
    - name: POSTGRES_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mypostgres-secret
          key: superUserPassword
    - name: POSTGRES_REPLICATION_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mypostgres-secret
          key: replicationUserPassword

The Kubegres tutorial uses 200Mi for the database size, but the smallest block storage volume DigitalOcean provisions is 1Gi. This bit me the first time around :^)

3.6. Apply using

$ kubectl apply -f my-postgres.yaml

and watch K8s spin up the pods with

$ kubectl get pods -o wide -w

3.7. If it seems like something went wrong, run

$ kubectl get events

to get more information.
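
The event list can get noisy. Here's a sketch of how I'd narrow it down to warnings only; the function name show_warnings is made up, while --field-selector and --sort-by are standard kubectl flags.

```shell
# show_warnings: list only Warning events in a namespace, sorted by time.
# "$1" is an optional namespace (default: default).
show_warnings() {
  kubectl get events \
    --namespace "${1:-default}" \
    --field-selector type=Warning \
    --sort-by=.lastTimestamp
}
```

If a volume fails to provision, the related events should show up in this list, and kubectl describe pvc gives more detail on the claim itself.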

4. Done!

4.1. Check all the created resources with

$ kubectl get pod,statefulset,svc,configmap,pv,pvc -o wide

You should get something like:

NAME                 READY   STATUS    NODE
pod/mypostgres-1-0   1/1     Running   worker1
pod/mypostgres-2-0   1/1     Running   worker2
pod/mypostgres-3-0   1/1     Running   worker3

NAME                            READY
statefulset.apps/mypostgres-1   1/1
statefulset.apps/mypostgres-2   1/1
statefulset.apps/mypostgres-3   1/1

NAME                         TYPE
service/mypostgres           ClusterIP
service/mypostgres-replica   ClusterIP

NAME
configmap/base-kubegres-config

NAME                          CAPACITY
persistentvolume/pvc-838...   1Gi
persistentvolume/pvc-da6...   1Gi
persistentvolume/pvc-e25...   1Gi

NAME                                               CAPACITY
persistentvolumeclaim/postgres-db-mypostgres-1-0   1Gi
persistentvolumeclaim/postgres-db-mypostgres-2-0   1Gi
persistentvolumeclaim/postgres-db-mypostgres-3-0   1Gi

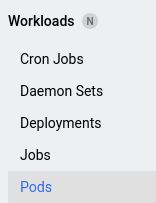

4.2. Check the dashboard! Go to your cluster's page and click Kubernetes Dashboard to the right of the cluster's name. It's pretty comprehensive!

Look at your pods by clicking Pods in the sidebar, under Workloads. Find the primary Postgres pod using the labels on each pod's page.

4.3. Back to the terminal! Check which pod is primary with

$ kubectl get pods --selector replicationRole=primary

NAME             READY   STATUS    RESTARTS   AGE
mypostgres-1-0   1/1     Running   0          3m21s

Check which ones are replicas with

$ kubectl get pods --selector replicationRole=replica

NAME             READY   STATUS    RESTARTS   AGE
mypostgres-2-0   1/1     Running   0          2m59s
mypostgres-3-0   1/1     Running   0          2m19s

4.4. Delete the primary pod with

$ kubectl delete pod <pod-name>

and watch as a replica gets promoted with

$ kubectl get pods -w --selector replicationRole=primary

Blink and you'll miss it!
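
Steps 4.3 and 4.4 can be rolled into one helper so you don't have to copy the pod name by hand. This is only a sketch that relies on the replicationRole=primary label used above; primary_pod and kill_primary are names I invented.

```shell
# primary_pod: print the current primary in the form "pod/<name>".
primary_pod() {
  kubectl get pods --selector replicationRole=primary --output name
}

# kill_primary: delete whichever pod is currently the primary.
kill_primary() {
  target=$(primary_pod)
  if [ -z "$target" ]; then
    echo "no primary pod found" >&2
    return 1
  fi
  echo "deleting $target"
  kubectl delete "$target"
}
```

Run kill_primary in one terminal while the watch command from this step is running in another.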

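4.5. Recheck the primary and replica pods with the commands from step 4.3. In my case, mypostgres-2-0 was promoted to primary and a new replica, mypostgres-4-0, was created to bring the cluster back to three instances.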

$ kubectl get pods --selector replicationRole=primary

NAME             READY   STATUS    RESTARTS   AGE
mypostgres-2-0   1/1     Running   0          51s

$ kubectl get pods --selector replicationRole=replica

NAME             READY   STATUS    RESTARTS   AGE
mypostgres-3-0   1/1     Running   0          4m21s
mypostgres-4-0   1/1     Running   0          34s

The king is dead, long live the king!

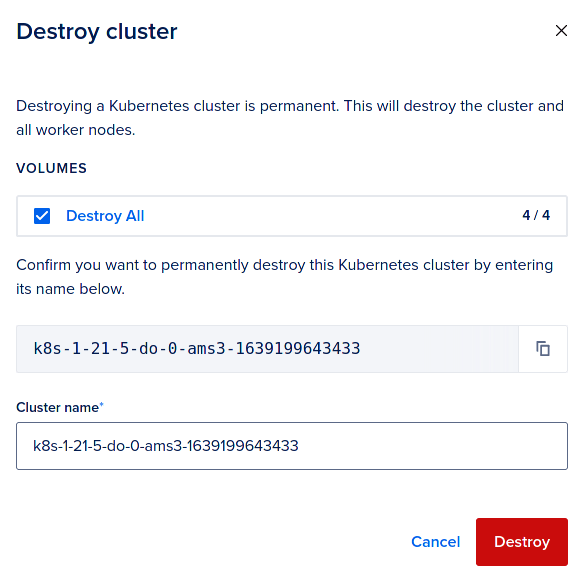

5. Cleanup

We wouldn't want to rack up a huge bill, would we?

5.1. Go to your cluster's page and select the Settings tab.

5.2. Scroll down to the very bottom and click the red Destroy button.

5.3. You'll be asked if you want to destroy the persistent volumes provisioned along with your cluster. Select Destroy All, enter the cluster's name, and click the red Destroy button.
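
Since doctl is already set up from step 2, the same teardown should also be possible from the terminal. This is a sketch, assuming your doctl version supports the --dangerous flag on doctl kubernetes cluster delete, which is meant to remove associated resources such as volumes along with the cluster. Check doctl kubernetes cluster delete --help before relying on it. The function name destroy_cluster is my own.

```shell
# destroy_cluster: delete a cluster together with its associated resources.
# "$1" is the cluster's name or ID. doctl asks for confirmation first.
destroy_cluster() {
  if [ -z "$1" ]; then
    echo "usage: destroy_cluster <cluster-name-or-id>" >&2
    return 1
  fi
  # --dangerous is assumed to be available in your doctl version.
  doctl kubernetes cluster delete "$1" --dangerous
}
```

Afterwards, it's worth checking the Volumes page in the control panel to confirm nothing billable was left behind.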

Thank you!

And that's it! Thank you for reading my first post on dev.to :) And thank you to the folks at DigitalOcean for organizing the challenge and inspiring me to get off my butt!

In my next post I'll demonstrate how to do the same deployment using Terraform and GitHub Actions! Check out the repo in the meantime.