Part IV: Telegram notifications

Once all the monitoring components are in place, the natural next question is how to get notified when something goes wrong. Good question.

Alertmanager does the trick and it natively supports all the major channels: Slack, PagerDuty, OpsGenie and more. But at the time of writing it does not support Telegram, which is kinda popular even for business communication in smaller companies.

Let's fix that with a few AWS resources and a simple Go Lambda function.

SNS

SNS is one of the channels Alertmanager supports. It's actually very convenient in this case: since we have an IAM role attached to the instance, we don't need to hassle with credentials.

Let's dive into Terraform again.

resource "aws_sns_topic" "prometheus_alerts" {
  name = "prometheus_alerts"
}

SQS

The next part is the queue. We could connect a Lambda directly to SNS, but SQS gives us more reliability. SQS effectively becomes the only SNS subscriber, and messages are queued for further processing.

resource "aws_sqs_queue" "prometheus_alerts" {
  name = "prometheus_alerts"
}

And since the SNS topic will be pushing messages to this queue, we also need to allow that:

resource "aws_sqs_queue_policy" "prometheus_alerts" {
  queue_url = aws_sqs_queue.prometheus_alerts.id
  policy    = <<POLICY
{
  "Version": "2012-10-17",
  "Id": "sqspolicy",
  "Statement": [
    {
      "Sid": "First",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "sqs:SendMessage",
      "Resource": "${aws_sqs_queue.prometheus_alerts.arn}",
      "Condition": {
        "ArnEquals": {
          "aws:SourceArn": "${aws_sns_topic.prometheus_alerts.arn}"
        }
      }
    }
  ]
}
POLICY
}

Also, create the subscription:

resource "aws_sns_topic_subscription" "prometheus_alerts" {
  topic_arn = aws_sns_topic.prometheus_alerts.arn
  protocol  = "sqs"
  endpoint  = aws_sqs_queue.prometheus_alerts.arn
}
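One detail worth checking with this subscription: unless raw message delivery is enabled on it, SNS wraps every notification in a JSON envelope, and the alert text ends up in the envelope's Message field instead of being the SQS body itself. Below is a minimal Go sketch of unwrapping it on the consumer side, assuming that default envelope. The helper name extractMessage is hypothetical and is not part of the function shown later in this article.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// snsEnvelope mirrors the JSON document SNS writes to SQS when raw message
// delivery is disabled. Only the fields used here are declared.
type snsEnvelope struct {
	Type    string `json:"Type"`
	Message string `json:"Message"`
}

// extractMessage (hypothetical helper) returns the inner SNS message when the
// body looks like an SNS notification envelope, and the body unchanged
// otherwise, for example when raw message delivery is enabled.
func extractMessage(body string) string {
	var env snsEnvelope
	if err := json.Unmarshal([]byte(body), &env); err != nil {
		return body
	}
	if env.Type != "Notification" {
		return body
	}
	return env.Message
}

func main() {
	wrapped := `{"Type":"Notification","Message":"[FIRING] HighCPU"}`
	fmt.Println(extractMessage(wrapped))
	fmt.Println(extractMessage("[RESOLVED] HighCPU"))
}
```

Alternatively, setting raw_message_delivery = true on the aws_sns_topic_subscription resource should make the SQS body the plain message, so no unwrapping is needed.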

Lambda function

Here we go again: Lambda needs a role and permissions, so let's create these resources first.

data "aws_caller_identity" "current" {}

data "aws_region" "current" {}

resource "aws_iam_role" "alertmanager_notify" {
  name = "alertmanager_notify"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Principal = {
          Service = "lambda.amazonaws.com"
        }
        Action = "sts:AssumeRole"
      }
    ]
  })
}

resource "aws_iam_policy" "alertmanager_notify" {
  name = "alertmanager_notify"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "logs:CreateLogGroup",
        ]
        Resource = [
          "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/lambda/alertmanager_notify",
        ]
      },
      {
        Effect = "Allow"
        Action = [
          "logs:CreateLogStream",
          "logs:PutLogEvents",
        ]
        Resource = [
          "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/lambda/alertmanager_notify:*",
        ]
      },
      {
        Effect = "Allow"
        Action = [
          "sqs:DeleteMessage",
          "sqs:GetQueueAttributes",
          "sqs:ReceiveMessage",
        ]
        Resource = [
          aws_sqs_queue.prometheus_alerts.arn,
        ]
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "alertmanager_notify" {
  role       = aws_iam_role.alertmanager_notify.name
  policy_arn = aws_iam_policy.alertmanager_notify.arn
}

With these permissions, the Lambda function can receive messages from the SQS queue and delete them once they are processed. The CloudWatch part is pretty self-explanatory: we want to see some logs. The Lambda function definition itself is straightforward:

resource "aws_lambda_function" "alertmanager_notify" {
  filename         = "${path.module}/assets/function.zip"
  source_code_hash = filebase64sha256("${path.module}/assets/function.zip")
  function_name    = "alertmanager_notify"
  role             = aws_iam_role.alertmanager_notify.arn
  handler          = "main"
  runtime          = "go1.x"

  lifecycle {
    ignore_changes = [
      filename,
      last_modified,
      source_code_hash,
    ]
  }

  environment {
    variables = {
      TELEGRAM_TOKEN   = "<telegram token from bot father>"
      TELEGRAM_CHANNEL = "<telegram group id>"
    }
  }
}

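Note the ${path.module}/assets/function.zip: this is a zip file with some dummy code. We only need this archive for the initial creation; the final code is pushed to AWS externally. Keep in mind that the queue still has to be connected to the function as a trigger, for example with an aws_lambda_event_source_mapping resource, otherwise the function is never invoked.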

Lambda code

For the integration with Telegram we're gonna use the github.com/go-telegram-bot-api/telegram-bot-api library. It's just a thin wrapper over the Telegram API and it's sufficient for this use case.

Please note that the signatures of handle and sendMessage should ideally take an interface, some sort of TelegramSender or so. But for the sake of simplicity we use BotAPI directly 😊

package main

import (
	"context"
	"fmt"
	"os"
	"strconv"

	"github.com/aws/aws-lambda-go/events"
	"github.com/aws/aws-lambda-go/lambda"
	tgbotapi "github.com/go-telegram-bot-api/telegram-bot-api"
	log "github.com/sirupsen/logrus"
)

var (
	telegramToken   = os.Getenv("TELEGRAM_TOKEN")
	telegramChannel = os.Getenv("TELEGRAM_CHANNEL")
	parseMode       = "HTML"
)

func init() {
	log.SetFormatter(&log.JSONFormatter{})
}

// sendMessage posts a single message to the given Telegram chat.
func sendMessage(bot *tgbotapi.BotAPI, channel int64, message string, mode string) error {
	log.Info("sending notification to Telegram")
	msg := tgbotapi.NewMessage(channel, message)
	msg.ParseMode = mode
	_, err := bot.Send(msg)
	if err != nil {
		return fmt.Errorf("could not send message: %w", err)
	}
	return nil
}

// handle returns the Lambda handler that forwards every SQS record to Telegram.
func handle(bot *tgbotapi.BotAPI, channel int64) func(context.Context, events.SQSEvent) error {
	return func(ctx context.Context, event events.SQSEvent) error {
		for _, record := range event.Records {
			log.
				WithField("message_id", record.MessageId).
				Info("processing SQS record")
			err := sendMessage(bot, channel, record.Body, parseMode)
			if err != nil {
				// Return the error instead of exiting the process, so Lambda
				// reports a failed invocation and SQS can redeliver the batch.
				return fmt.Errorf("could not process message: %w", err)
			}
		}
		return nil
	}
}

func main() {
	// create telegram client
	bot, err := tgbotapi.NewBotAPI(telegramToken)
	if err != nil {
		log.Fatalf("could not create telegram client: %s", err)
	}

	// parse the chat ID, which arrives as a string from the environment
	telegramChannelInt, err := strconv.ParseInt(telegramChannel, 10, 64)
	if err != nil {
		log.Fatalf("could not parse channel id: %s", err)
	}

	lambda.Start(handle(bot, telegramChannelInt))
}

Let's deploy the function!

GOARCH=amd64 GOOS=linux go build main.go
zip function.zip main
aws lambda update-function-code --function-name alertmanager_notify --zip-file fileb://./function.zip

Alertmanager configuration

First of all, we need to allow the instance where Alertmanager is running to interact with the SNS topic. Add this statement to the IAM policy from the first chapter:

{
  Effect = "Allow"
  Action = [
    "sns:Publish",
  ]
  Resource = [
    aws_sns_topic.prometheus_alerts.arn,
  ]
},

The last bit is the YAML for Alertmanager. This is perhaps the simplest configuration:

global:
  resolve_timeout: 1m
receivers:
  - name: sns
    sns_configs:
      - message: |
          {{ if eq .Status "firing" }}🔥 {{ end }}{{ if eq .Status "resolved" }}✅ {{ end }}[{{ .Status | toUpper }}] {{ .CommonLabels.alertname }}
          {{ range .Alerts }}
          <b>Alert:</b> {{ .Annotations.title }}{{ if .Labels.severity }} - `{{ .Labels.severity }}`{{ end }}
          <b>Description:</b> {{ .Annotations.description }}
          <b>Details:</b>
          {{ range .Labels.SortedPairs }}- {{ .Name }}: <i>{{ .Value }}</i>
          {{ end }}
          {{ end }}
        sigv4:
          region: eu-west-1
        topic_arn: <SNS topic ARN>
route:
  group_by:
    - "..."
  group_interval: 30s
  group_wait: 5s
  receiver: sns
  repeat_interval: 3h

Result

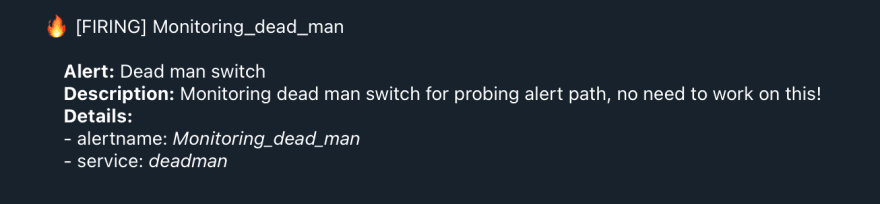

Now we're able to receive alerts in the Telegram group. See the example screenshot, which comes from a similar configuration. Pretty neat, huh?

Wrap

And this is the end of this series. I've been working on this setup for the past two months and I must say I'm pretty happy with the overall result.

Here's also one final disclaimer: do not forget to set up external monitoring for Prometheus itself. Expose the server's readiness probe to the internet and use a tool such as UptimeRobot, because you really want to know when Prometheus goes down.

Do you have any questions? Ping me on Twitter or ask directly here. I'll do my best to help you.